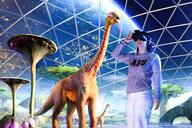

Rahul Divekar, a Rensselaer computer science graduate student, demonstrates an AI-assisted Mandarin Chinese language-learning aid under development at the Cognitive and Immersive Systems Lab at RPI.

Rensselaer Polytechnic Institute

At Rensselaer Polytechnic Institute, students are immersing themselves in Chinese culture without setting foot outside their classroom.

The Mandarin Project, a collaboration between RPI, located in upstate New York, and the tech giant IBM, places students in a virtual world where they can practice their Mandarin language skills in a series of simulated scenarios, such as ordering lunch in a restaurant or taking a tai chi class.

The project aims to make students feel as if they are actually in China, without the inconvenience of traveling there, said Helen Zhou, assistant professor of communication and media at RPI, who has been actively involved in designing the project.

In a high-tech "cognitive immersive room," a classroom with a 360-degree floor-to-ceiling screen, students can practice their Mandarin with artificial intelligence-powered animated characters (including a floating panda head). The CIR combines several emerging technologies -- natural language processing, speech-to-text and movement tracking -- to create a unique learning experience, said Zhou.

Talking to a computer, rather than a native speaker, can make learners feel less embarrassed about making mistakes, said Zhou. They also get much more accurate feedback, she said.

“When students practice speaking with native speakers, nobody tells them how they did,” said Zhou. With the AI technology, students get immediate feedback and are coached on correct pronunciation and sentence structure.

Because the technology is so new, there are limitations, said Zhou. For now, the number of scenarios available to practice with is limited. Only one student can speak at a time because the technology requires students to use a mike. And while the computer voice has perfect pronunciation, it doesn’t exactly sound natural.

“It’s still experimental,” said Zhou.

Computerized Teaching Assistants

Zhou is just one of a growing number of professors experimenting with AI. At the Georgia Institute of Technology, Ashok Goel, professor of computer and cognitive science, has been working with virtual teaching assistants for several years. His most famous AI-powered assistant, Jill Watson, was built on IBM’s Watson platform. But Goel says his team has since developed its own technology and no longer relies on IBM’s Watson.

In 2016, Goel made headlines after revealing that some of his students (in a master’s-level online course in AI) were unable to distinguish between AI and human TAs answering questions in a discussion forum. Now, two and a half years later, he said his students are “pretty good at figuring out what is AI,” though they still sometimes get “false positives.”

AI teaching assistants have now been used in an undergraduate computing course at Georgia Tech and could expand to more colleges and universities, said Goel. Institutions all over the world have been asking for custom AI TAs, but creating them is still too labor intensive, he said. It used to take 1,000 hours to create and train an AI TA. Now it takes 200 to 300 hours, but he thinks his team could get it down to 15 hours.

“The interest has been huge,” said Goel. “Maybe in five years’ time we can do it for everyone.”

The AI TAs can’t answer deep questions about content -- only human teaching assistants can do that -- said Goel, but the AI TAs are useful “because students tend to ask the same questions again and again.” Questions about assessment or deadlines are easily handled by AI, he said.

Aside from developing AI-powered TAs, Goel’s team has been busy creating new AI applications, including Vera -- a Virtual Ecological Research Assistant that will help biology researchers easily model different ecological scenarios, such as the impact of increased pollution on the U.S.’s starfish population.

Goel's team is also thinking about AI’s role in tutoring students, helping them identify the right literature for research and even guiding them to turn their ideas into successful start-up companies.

“That last one is the most far out,” said Goel. “We only have a simple prototype working.”

Robo-Grading Concerns

AI-assisted grading is one area that Goel’s team has deliberately avoided.

“We need to be doing things that industry is not doing,” he said. Companies such as Pearson are already working on technology to grade essays and math problems using AI -- an endeavor that has concerned some academics. But Milena Marinova, senior vice president for AI products and solutions at Pearson, has said these tools are intended to help instructors do their jobs better, not put them out of a job.

“AI-assisted decision making is better than human alone,” she said.

Isaac Chuang, professor of physics and senior associate dean of digital learning at the Massachusetts Institute of Technology, said his institution has investigated AI grading thoroughly in the past, but decided not to use it.

“We’ve been through many meetings; this kept being brought up again and again,” said Chuang.

He said it “still doesn’t work very well” for anything more in-depth than check-box questions, but noted that the technology “has grown a great deal” in recent years.

Student essays help instructors understand how students think and how they express themselves, said Chuang.

“AI didn’t do well in grading the things that mattered most to us in essays,” he said.

Connecting Students

One potential use of AI is connecting students with each other, said Chuang. In one experiment, MIT is pairing current computer science students with alumni, so the alumni can offer feedback on the students’ coding skills, something that would be “very hard for a computer to do,” he said. With the right statistics about a student’s interests and needs, AI could “glue social networks together,” Chuang said.

Universities are also using AI to help students connect with their institutions. Andrew Magliozzi, co-founder and CEO of AdmitHub, builds AI-powered chat bots for institutions, such as Arizona State University and California State University at Northridge, that can be used to recruit and enroll students, as well as retain them.

The chat bot, branded as a university mascot, communicates with students via text rather than through an app. Students are told that the chat bot is AI, which has had some “interesting benefits,” said Magliozzi.

“We find students have a higher willingness to be candid with these bots,” said Magliozzi -- especially when discussing personal issues such as health, finances, immigration status or family issues.

“Students don’t feel judged,” he said.

Universities sometimes forget that they use a lot of language and acronyms that could be confusing to students, said Magliozzi. Students might also feel embarrassed asking a university staff member simple questions such as "What’s FAFSA?" he said, referring to the Free Application for Federal Student Aid. When the AI can’t answer a student’s question, or detects that a sensitive issue such as bereavement is being discussed, it tells the student it is going to get a person to help.

“The AI is not flawless,” said Magliozzi, and “topics of conversation are limited.”

Tech-Enhanced Studies

Meanwhile, business schools are using AI to help teach students how to analyze business data and make predictive models, without the need to first learn how to code. DataRobot, a platform that aims to make predictive analytics accessible to “almost anyone,” is part of the curriculum at 40 M.B.A. programs, including the Harvard Business School.

John Boersma, director of educational services at DataRobot, explained that students in M.B.A. programs are often interested in data science but lack the technical skills to create predictive models themselves. With DataRobot, students can make predictive models with ease, said Boersma. Universities are even starting to use DataRobot internally to model student behavior, he said.

At Carnegie Mellon University, AI is being used to encourage students in a computer science program to collaboratively develop cloud computing software and tools. By detecting both positive and negative collaborative behaviors, an AI conversational agent can steer students to the right support to help them overcome hurdles in their group work.

Carolyn Penstein Rosé, a professor at Carnegie Mellon's Language Technologies Institute and the Human-Computer Interaction Institute, who leads the project, said her team had to work to ensure that the AI is not seen as intrusive by students. “We want to emphasize and encourage students to talk with each other, not give the agent a core role,” she said.

AI could be used to address a perennial concern for instructors who assign group work, said Rosé -- getting the balance right between productivity and learning. If you emphasize productivity too much, you might stifle conversation and exploration of ideas that help students learn. But if you overencourage conversation, then projects might move forward too slowly, said Rosé. Using AI, Rosé believes her team could find the optimum balance.

Carnegie Mellon’s AI-powered conversational agent will soon be ready for industry deployment and could have some interesting applications in higher ed. Rosé’s team is already working with Western Governors University to develop a conversational agent for student career counseling.

“We’re currently focused on analyzing the huge amount of data they’ve been collecting,” said Rosé. “This is just the beginning.”

Monitoring Behavior

When grade school teachers are in training, they often have to film themselves interacting with students in the classroom as part of their studies. Jake Whitehill, a professor of computer science at Worcester Polytechnic Institute, in Massachusetts, said hiring observers to watch and assess this footage is expensive. He believes having the footage assessed by AI would be cheaper and more effective.

Whitehill said his platform, which is expected to be piloted in 2020, will give trainees frequent and detailed feedback on their interactions with students. By monitoring students’ facial expressions, the technology will be able to pick up on subtle interactions that could indicate a positive or negative learning environment.

“There is the possibility for students to opt out of being filmed,” said Whitehill. “But parents almost never opt out.”

The technology could potentially be applied to lecture halls, and indeed, something similar is already being tested at the University of St. Thomas in Minnesota.

Analyzing video with AI can also be used to catch students who cheat on tests, said Michael London, CEO of exam proctoring company Examity. By analyzing data from thousands of test takers, Examity has been able to pinpoint behaviors linked to cheating such as heads turning or audio levels rising. Faces can be matched against IDs to ensure that students are who they say they are. And unusual behaviors, such as finishing a two-hour test in two minutes, can be picked up.

“Humans are always going to be better proctors than machines, but AI is an advantage,” said London.